Diffraction Casting: Revolutionizing Optical Computing for Next-Gen AI Applications

Introduction to Optical Computing

- The Need for Powerful Computing Solutions: The growing complexity of applications like artificial intelligence demands increasingly powerful, energy-intensive computers. Optical computing offers a potential solution for boosting speed and efficiency, but challenges remain in its practical implementation.

Understanding Diffraction Casting

- What is Diffraction Casting?: Diffraction casting is a new design framework that addresses existing drawbacks of optical computing and introduces new concepts that could make it practical for next-generation devices.

Challenges of Traditional Electronic Computing

- Limitations of Current Technology: Whether it's your smartphone or laptop, today's computing devices are all built on electronic technology. Yet this approach has its drawbacks, chief among them substantial heat production as performance rises and the looming limits of current fabrication techniques.

As a result, scientists are pursuing alternative computational techniques that aim to overcome these limitations and, ideally, provide innovative features and advantages.

The Promise of Optical Computing

- Harnessing the Speed of Light: One potential solution lies in optical computing, a concept that has been around for decades but has yet to achieve commercial success.

Optical computing harnesses the speed of light waves and their complex interactions with various optical materials, all without generating heat. Additionally, many light waves can pass through a material simultaneously without disturbing one another, which in theory enables a highly parallel, power-efficient, and high-speed computing system.

Historical Context: Shadow Casting in Optical Computing

- The Early Attempts: "During the 1980s, researchers in Japan examined a method of optical computing called shadow casting, which could carry out simple logical operations. Nonetheless, their approach utilized bulky geometric optical designs akin to the vacuum tubes used in early digital computing. Although these methods were theoretically sound, they lacked the necessary flexibility and integration ease for practical utility," explained Associate Professor Ryoichi Horisaki of the Information Photonics Lab at the University of Tokyo.

Advancements through Diffraction Casting

- Enhancing Optical Elements: Horisaki's team presents an optical computing approach known as diffraction casting, which builds on the concept of shadow casting. While shadow casting relies on light rays interacting with various geometries, diffraction casting leverages the inherent properties of light waves. This results in more spatially efficient and functionally adaptable optical elements that can be extended as needed for universal computing applications.

Numerical Simulations and Results

- Testing the Framework: "We executed numerical simulations that demonstrated very favorable results, using small black-and-white images measuring 16 by 16 pixels, which are even smaller than the icons displayed on a smartphone."

An All-Optical System for Data Processing

- From Optical to Digital: Horisaki and his team propose an all-optical system, meaning that it converts only the final output into an electronic, digital format; all preceding stages of the system operate entirely optically. Their research has been published in Advanced Photonics.

Application and Representation of Data

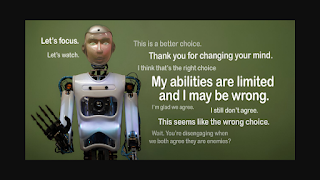

- Utilizing Images as Data Sources: Their concept uses an image as the data source, which naturally suggests that the system could be applied to image processing. However, other types of data, particularly the kinds used in machine learning systems, can also be represented in graphical form. The source image is then combined with a series of additional images that depict stages in logic operations.
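To make the idea of representing non-image data in graphical form concrete, here is a minimal sketch that packs raw bytes into a 16 by 16 black-and-white image, the size used in the simulations. The function names and the row-by-row, most-significant-bit-first layout are assumptions made for illustration; the article does not describe the encoding the researchers use.

```python
SIZE = 16  # pixels per side, matching the 16 by 16 images mentioned in the article


def bytes_to_image(data: bytes, size: int = SIZE) -> list[list[int]]:
    """Pack bytes into a size x size grid of 0/1 pixels (hypothetical layout).

    Bits are written row by row, most significant bit first, and the
    unused part of the grid is padded with zeros.
    """
    capacity = size * size // 8  # a 16 x 16 grid holds 32 bytes
    if len(data) > capacity:
        raise ValueError(f"at most {capacity} bytes fit in a {size}x{size} image")
    padded = data.ljust(capacity, b"\x00")
    bits = [(byte >> shift) & 1 for byte in padded for shift in range(7, -1, -1)]
    return [bits[row * size:(row + 1) * size] for row in range(size)]


def image_to_bytes(image: list[list[int]]) -> bytes:
    """Inverse of bytes_to_image; the zero padding is returned as well."""
    bits = [pixel for row in image for pixel in row]
    return bytes(
        sum(bit << (7 - i) for i, bit in enumerate(bits[start:start + 8]))
        for start in range(0, len(bits), 8)
    )


image = bytes_to_image(b"optical")
assert image_to_bytes(image).rstrip(b"\x00") == b"optical"
```

Any reversible mapping from data to pixels would serve the same purpose; this one is simply easy to check by hand.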

Layers in Optical Processing

- A Visual Analogy: Think of it as layers in image-editing software such as Adobe Photoshop. You begin with an input layer, which is the source image, and then add further layers on top. These layers can obscure, manipulate, or transmit information from the layer below. The final output, the top layer, results from processing this combination of layers.

The Process of Diffraction Casting

- Creating Digital Images: In this context, light passes through these layers and casts an image on a sensor (hence the term 'casting' in diffraction casting). This image is subsequently converted into digital data for storage or display to the user.
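The layer analogy can be sketched in a few lines of code. This is a deliberately simplified toy: each layer only scales the light intensity pixel by pixel, which is closer to the older shadow-casting picture than to real diffraction, where light spreads between layers as a wave. The function name, the transmittance values, and the sensor threshold are all assumptions for illustration, not part of the published design.

```python
def cast(source, layers, threshold=0.5):
    """Pass light from a binary source image through a stack of layers.

    source: grid of 0/1 pixels (1 = light emitted).
    layers: grids of transmittance values in [0, 1] (0 blocks, 1 transmits).
    Returns the thresholded sensor image as a grid of 0/1 pixels.
    """
    intensity = [[float(pixel) for pixel in row] for row in source]
    for layer in layers:
        # Every step up to here is "optical": intensities are only attenuated.
        intensity = [
            [light * t for light, t in zip(light_row, t_row)]
            for light_row, t_row in zip(intensity, layer)
        ]
    # The sensor is the only electronic step: intensity becomes digital bits.
    return [[1 if light >= threshold else 0 for light in row] for row in intensity]


source = [[1, 1],
          [0, 1]]
mask = [[1.0, 0.0],   # blocks the top-right pixel
        [1.0, 1.0]]
dimmer = [[0.8, 0.8],
          [0.8, 0.3]]  # strongly attenuates the bottom-right pixel

print(cast(source, [mask, dimmer]))  # [[1, 0], [0, 0]]
```

A faithful simulation would replace the per-pixel multiplication with wave propagation between layers, which this sketch leaves out.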

Future Potential and Commercial Viability

- An Auxiliary Component in Computing: "Diffraction casting represents merely one component in a theoretical computer founded on this principle. It may be more appropriate to view it as an auxiliary element rather than a complete substitute for existing systems, similar to how graphical processing units serve as specialized components for graphics, gaming and machine learning tasks," stated lead author Ryosuke Mashiko.

Estimating Time for Commercial Readiness

"I estimate that it will take approximately 10 years before this technology becomes commercially viable, as significant effort is required for physical implementation, which despite being based on solid research, has not yet been developed."

Conclusion: The Road Ahead for Diffraction Casting

- Expanding into Quantum Computing: "At this stage, we are able to showcase the applicability of diffraction casting in carrying out the 16 essential logic operations foundational to much information processing. Moreover, our system has the potential to evolve into the burgeoning area of quantum computing, which transcends conventional methods. The future will determine the results."
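The figure of 16 logic operations follows from counting: two binary inputs have four possible combinations, and each combination can map to 0 or 1, giving 2^4 = 16 distinct functions. The sketch below enumerates them in ordinary software and applies one pixel by pixel to two small binary images. The numbering scheme and function names are assumptions for illustration; it shows what the operations compute, not how the optical system performs them.

```python
def logic_op(k):
    """Return the k-th two-input Boolean function, for k in 0..15.

    Bit number (2*a + b) of k is the output for the input pair (a, b),
    so k is simply the function's truth table read as a 4-bit number.
    """
    if not 0 <= k <= 15:
        raise ValueError("k must be between 0 and 15")
    return lambda a, b: (k >> (2 * a + b)) & 1


def apply_op(k, image_a, image_b):
    """Apply the k-th operation pixel by pixel to two equally sized binary images."""
    op = logic_op(k)
    return [[op(a, b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(image_a, image_b)]


# Indices of some familiar operations under this numbering.
NOR, XOR, NAND, AND, OR = 1, 6, 7, 8, 14

# These two images together cover all four input pairs (0,0), (0,1), (1,0), (1,1).
image_a = [[0, 0],
           [1, 1]]
image_b = [[0, 1],
           [0, 1]]

print(apply_op(AND, image_a, image_b))  # [[0, 0], [0, 1]]
print(apply_op(XOR, image_a, image_b))  # [[0, 1], [1, 0]]
```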
